A logarithm is a mathematical function that represents the inverse operation to exponentiation. It helps solve equations of the form “x raised to the power of y equals z,” where the logarithm seeks to find the value of y when given x and z. In other words, it helps you find the exponent (power) to which a given base (x) must be raised to obtain a given result (z).

Logarithms have several important properties, including:

- log(x)(1) = 0: Any number raised to the power of 0 equals 1, so the logarithm of 1 to any base is 0.

- log(x)(x) = 1: The logarithm of a number to the same base is always 1, as x^1 = x.

- log(x)(x^y) = y: The logarithm of a number raised to a power (x^y) is equal to the exponent y.

- log(x)(y * z) = log(x)(y) + log(x)(z): The logarithm of a product is the sum of the logarithms of the factors.

- log(x)(y / z) = log(x)(y) – log(x)(z): The logarithm of a quotient is the difference of the logarithms of the dividend and the divisor.

In the context of algorithm analysis, the logarithm is used to express the time complexity of certain algorithms. When you see an algorithm with a time complexity of O(log n), it means that the algorithm’s running time increases in proportion to the logarithm of the input size (n) rather than linearly with n itself.

The logarithm base used in O(log n) is typically not specified because, for algorithm analysis, the base of the logarithm does not affect the overall growth rate of the function and thus does not impact the big O notation. The most common bases used are 2 (binary logarithm) and 10 (common logarithm), but in computer science we use binary logarithm (base 2).

Here’s a simple explanation of how O(log n) applies to algorithms:

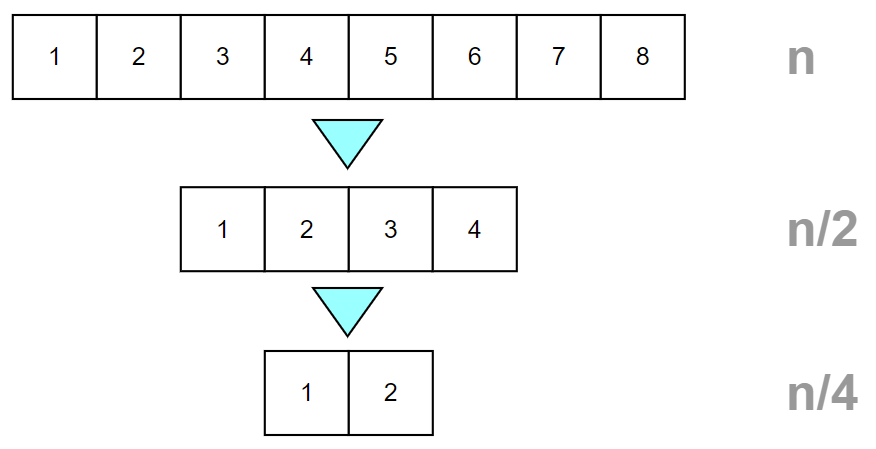

Suppose you have an algorithm that follows a “divide and conquer” approach, such as binary search. Binary search repeatedly divides the search space in half to find a specific element in a sorted list (array). With each iteration, the algorithm reduces the search space by half.

For example, let’s say you have a sorted list with 16 elements. In the first iteration of binary search, you check the middle element, and if it’s not the one you’re looking for, you know whether to search the left half or right half of the list. This reduces the search space to 8 elements.

In the next iteration, you again check the middle element of the remaining 8 elements, and the process continues. With each iteration, the algorithm reduces the search space by half. This behavior is characteristic of O(log n) algorithms.

The number of steps required to complete the binary search is proportional to the logarithm of the number of elements in the list (n). With a list of 16 elements, binary search completes in at most 4 steps (log(base 2) 16 = 4). Even if the list size doubles (32 elements), binary search would only need at most 5 steps (log(base 2) 32 = 5).

As the size of the list grows, the running time of binary search increases much slower compared to linear or quadratic algorithms, making it an efficient algorithm with a time complexity of O(log n).

In other words, an algorithm with a time complexity of O(log n) is considered very efficient because, as the size of the input (n) increases, the running time of the algorithm grows much slower compared to algorithms with higher time complexities like O(n) (linear time) or O(n^2) (quadratic time).

Logarithms are used in various fields of science, engineering, and computer science for tasks like data compression, cryptography, signal processing, complexity analysis of algorithms, and more. In computer science, they play a significant role in determining the time complexity of algorithms like binary search and various divide-and-conquer algorithms.